How to run local LLMs with ease on your Mac and PC.

If you want to run LLMs privately, you are in luck: there are now not one but two solutions that let you run LLMs securely on your own machine.

LLMs have been around for a while now and have become even more popular since the launch of the ChatGPT website and API access. Forget relying on someone else's computer for powerful language models!

While options like ChatGPT, Google Bard, and Claude live in the cloud and can be expensive, you have a choice. You can run many powerful open-source language models, such as Llama 2, Zephyr-7B, Mistral 7B, and Microsoft Phi-2 2.7B, on your own computer. With LM Studio, you can run practically any compatible LLM locally with ease and potentially save money. Take control of your language model experience!

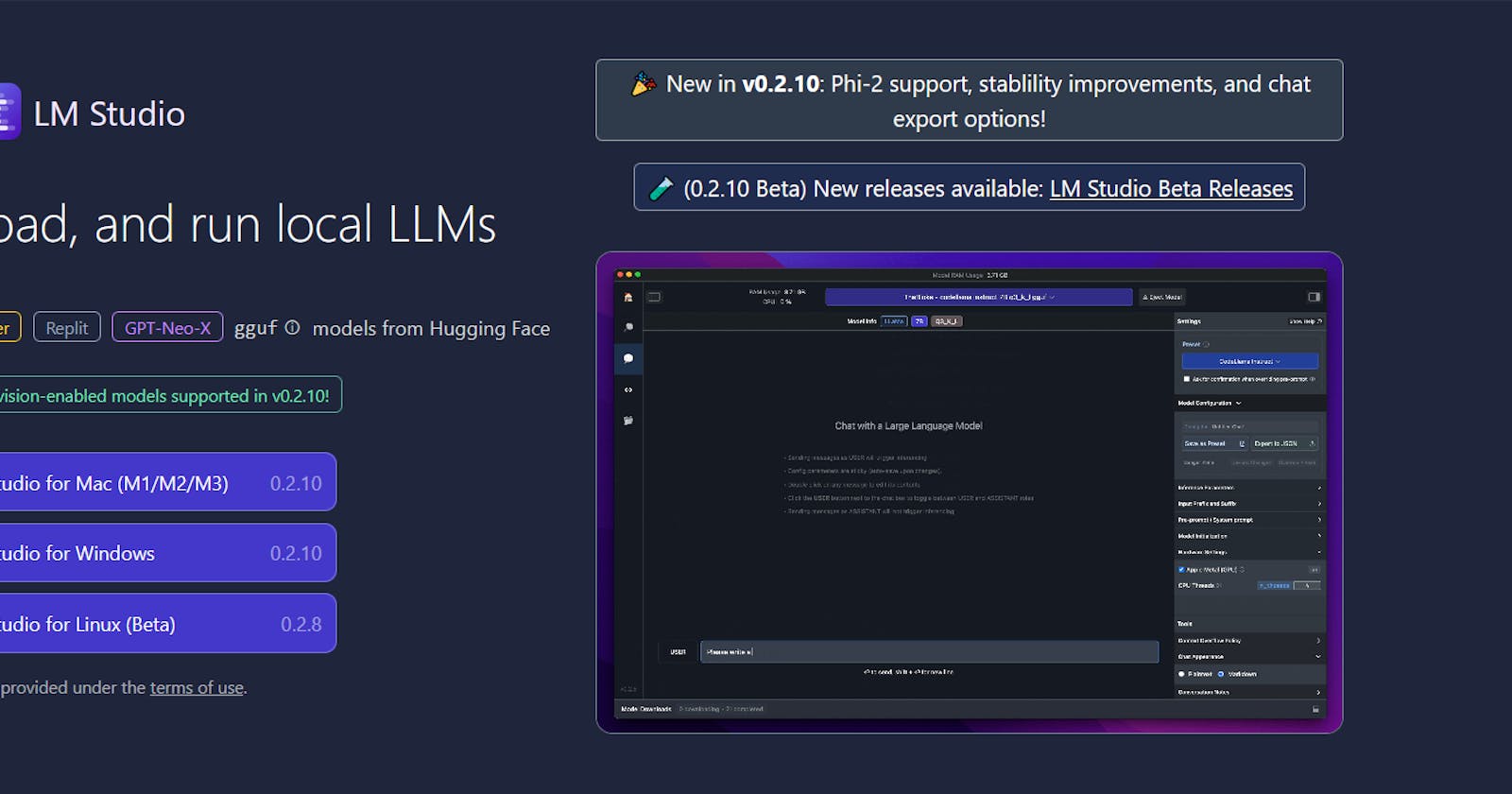

1. LM STUDIO

Setting up LM Studio on Windows and Mac is hassle-free, and the process is the same on both platforms.

What are the minimum hardware / software requirements?

· Apple Silicon Mac (M1/M2/M3) with macOS 13.6 or newer

· Windows / Linux PC with a processor that supports AVX2 (typically newer PCs)

· 16GB+ of RAM is recommended. For PCs, 6GB+ of VRAM is recommended

· NVIDIA/AMD GPUs supported
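If you are unsure whether a Linux PC meets the AVX2 requirement above, a quick check is possible. The following is a minimal sketch, assuming a Linux system where the kernel exposes CPU feature flags in `/proc/cpuinfo`; the helper name `has_avx2` is hypothetical and not part of LM Studio. On Windows or macOS you would need a different method.

```python
import platform
from pathlib import Path


def has_avx2(cpuinfo_text: str) -> bool:
    """Return True if any 'flags' line in /proc/cpuinfo-style text lists avx2."""
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        # Only inspect the CPU feature list, not model names or other fields.
        if key.strip() == "flags" and "avx2" in value.split():
            return True
    return False


if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")
    if platform.system() == "Linux" and cpuinfo.exists():
        print("AVX2 supported:", has_avx2(cpuinfo.read_text()))
    else:
        print("This sketch only reads /proc/cpuinfo, which exists on Linux.")
```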

There are two ways that you can discover, download and run these LLMs locally:

· Through the in-app Chat UI

· Through the OpenAI-compatible local server

All you have to do is download a compatible model file from the Hugging Face repository and start using it.
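To give a feel for the local server option, here is a minimal sketch of sending a chat request from Python using only the standard library. It assumes the server has been started inside LM Studio and is listening on `http://localhost:1234/v1`, and that it accepts the OpenAI-style `/chat/completions` request shape; check the address shown in your own LM Studio window. The model name `local-model` is a placeholder, and the function names are hypothetical.

```python
import json
import urllib.request

# Assumption: the local server runs at this address; adjust to match your setup.
BASE_URL = "http://localhost:1234/v1"


def build_chat_request(prompt: str, model: str = "local-model",
                       temperature: float = 0.7) -> dict:
    """Build an OpenAI-style chat completion payload."""
    return {
        "model": model,  # placeholder name, not a real model identifier
        "messages": [
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": prompt},
        ],
        "temperature": temperature,
    }


def chat(prompt: str, base_url: str = BASE_URL, timeout: float = 120.0) -> str:
    """POST the prompt to the local server and return the reply text."""
    payload = json.dumps(build_chat_request(prompt)).encode("utf-8")
    request = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    try:
        print(chat("Explain what a GGUF file is in one sentence."))
    except OSError as error:
        # Raised when the server is not running or the address is wrong.
        print("Could not reach the local server:", error)
```

Because the request format follows the OpenAI convention, existing code written against that API can often be pointed at the local address instead, so prompts and responses stay on your machine.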

Get Started

1) Your first step is to download LM Studio for Mac, Windows, or Linux (Beta), which you can do here. The download is roughly 400MB, so depending on your internet connection, it shouldn't take too long.

2) Your next step is to choose a model to download. Once LM Studio has launched, click the search icon to browse the available models. Most of these models are several gigabytes in size, so they may take a while to download.

Popular models:

• TheBloke/phi-2-GGUF, Q6_K or Q4_K_S version

• TheBloke/Llama-2-7B-Chat-GGUF

• TheBloke/OpenHermes-2.5-Mistral-7B-16k-GGUF (Q8)

• Dolphin 2.1 Mistral 7B - GGUF

• TheBloke codellama instruct GGUF q_3_l
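The suffixes in the list above (Q4_K_S, Q6_K, Q8) name quantization levels, which largely determine how big the download is and how much memory the model needs. As a rough sketch, file size is about the parameter count times the bits stored per weight, divided by eight. The bits-per-weight figures below are approximate, illustrative assumptions (and "Q8" is assumed to mean `Q8_0`); real files also carry metadata, so treat the results as ballpark numbers only.

```python
# Approximate bits stored per weight for a few GGUF quantization levels.
# These are assumed, rounded values for illustration, not exact figures.
APPROX_BITS_PER_WEIGHT = {
    "Q4_K_S": 4.5,
    "Q6_K": 6.6,
    "Q8_0": 8.5,
}


def estimate_size_gb(params_billions: float, quant: str) -> float:
    """Estimate model file size in GB from parameter count and quantization."""
    bits = APPROX_BITS_PER_WEIGHT[quant]
    total_bytes = params_billions * 1e9 * bits / 8
    return total_bytes / 1e9


if __name__ == "__main__":
    examples = [
        ("Phi-2 (2.7B)", 2.7, "Q4_K_S"),
        ("Phi-2 (2.7B)", 2.7, "Q6_K"),
        ("7B model", 7.0, "Q8_0"),
    ]
    for name, params, quant in examples:
        print(f"{name} {quant}: ~{estimate_size_gb(params, quant):.1f} GB")
```

Comparing the estimate with your available RAM or VRAM is a quick way to decide which version of a model to try first.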

3) Once the model has been downloaded, click the speech bubble icon on the left and select your model to load it, and you're ready to chat!

There you have it: setting up an LLM locally is that quick and simple. If you would like to speed up the response time, you can enable GPU acceleration in the panel on the right-hand side.